For chemists, the AI revolution has yet to happen

Source: Nature | Published: 2023-05-23

Machine-learning systems in chemistry need accurate and accessible training data. Until they get it, they won’t achieve their potential.

Many people are expressing fears that artificial intelligence (AI) has gone too far — or risks doing so. Take Geoffrey Hinton, a prominent figure in AI, who recently resigned from his position at Google, citing the desire to speak out about the technology’s potential risks to society and human well-being.

But against those big-picture concerns, in many areas of science you will hear a different frustration being expressed more quietly: that AI has not yet gone far enough. One of those areas is chemistry, for which machine-learning tools promise a revolution in the way researchers seek and synthesize useful new substances. But a wholesale revolution has yet to happen — because of the lack of data available to feed hungry AI systems.

Any AI system is only as good as the data it is trained on. These systems rely on what are called neural networks, which their developers teach using training data sets that must be large, reliable and free of bias. If chemists want to harness the full potential of generative-AI tools, they need to help to establish such training data sets. More data are needed — both experimental and simulated — including historical data and otherwise obscure knowledge, such as that from unsuccessful experiments. And researchers must ensure that the resulting information is accessible. This task is still very much a work in progress.

Take, for example, AI tools that conduct retrosynthesis. These begin with a chemical structure a chemist wants to make, then work backwards to determine the best starting materials and sequence of reaction steps to make it. AI systems that implement this approach include 3N-MCTS, designed by researchers at the University of Münster in Germany and Shanghai University in China [1]. This combines Monte Carlo tree search, an established search algorithm, with three neural networks. Such tools have attracted attention, but few chemists have yet adopted them.

To make accurate chemical predictions, an AI system needs sufficient knowledge of the specific chemical structures that different reactions work with. Chemists who discover a new reaction usually publish results exploring which structures it tolerates, but these studies are often not exhaustive. Unless AI systems have comprehensive knowledge, they might end up suggesting starting materials with structures that would stop reactions working or lead to incorrect products [2].

An example of mixed progress comes in what AI researchers call ‘inverse design’. In chemistry, this involves starting with desired physical properties and then identifying substances that have these properties, and that can, ideally, be made cheaply. For example, AI-based inverse design helped scientists to select optimal materials for making blue phosphorescent organic light-emitting diodes [3].

Computational approaches to inverse design, which ask a model to suggest structures with the desired characteristics, are already in use in chemistry, and their outputs are routinely scrutinized by researchers. If AI is to outperform pre-existing computational tools in inverse design, it needs enough training data relating chemical structures to properties. But what is meant by ‘enough’ training data in this context depends on the type of AI used.

A generalist generative-AI system such as ChatGPT, developed by OpenAI in San Francisco, California, is simply data-hungry. To apply such a generative-AI system to chemistry, hundreds of thousands — or possibly even millions — of data points would be needed.

A more chemistry-focused AI approach trains the system on the structures and properties of molecules. In the language of AI, molecular structures are graphs. In molecules, chemical bonds connect atoms, just as edges connect nodes in graphs. Such AI systems fed with 5,000–10,000 data points can already beat conventional computational approaches to answering chemical questions [4]. The problem is that, in many cases, even 5,000 data points are far more than are currently available.
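The graph view of a molecule can be made concrete with a small sketch. It is an illustration only, not the representation used by any system cited here: ethanol is written with its hydrogen atoms left implicit, and the names `atoms`, `bonds`, `build_adjacency` and `degree` are assumptions introduced for this example.

```python
# Ethanol (CH3-CH2-OH) with hydrogens left implicit.
# Atoms are the nodes of the graph and bonds are its edges.
atoms = ["C", "C", "O"]            # node labels, indexed 0..2
bonds = [(0, 1, 1), (1, 2, 1)]     # (atom index, atom index, bond order)


def build_adjacency(atoms, bonds):
    """Return {atom index: [(neighbour index, bond order), ...]}."""
    adjacency = {i: [] for i in range(len(atoms))}
    for a, b, order in bonds:
        # Bonds are undirected, so each one is stored from both ends.
        adjacency[a].append((b, order))
        adjacency[b].append((a, order))
    return adjacency


def degree(adjacency, index):
    """Number of heavy-atom neighbours bonded to the atom at `index`."""
    return len(adjacency[index])


adjacency = build_adjacency(atoms, bonds)
for i, symbol in enumerate(atoms):
    print(symbol, "is bonded to", [atoms[j] for j, _ in adjacency[i]])
```

A graph-based model would attach features to each node and edge (element, charge, bond order) and learn from those, rather than from a flat list of numbers.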

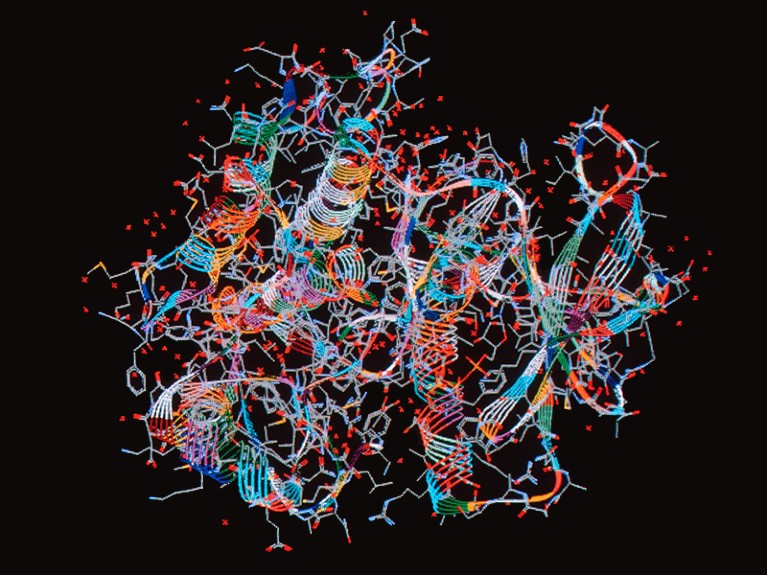

The AlphaFold protein-structure-prediction tool [5], arguably the most successful chemistry AI application, uses such a graph-representation approach. AlphaFold’s creators trained it on a formidable data set: the information in the Protein Data Bank, which was established in 1971 to collate the growing set of experimentally determined protein structures and currently contains more than 200,000 structures. AlphaFold provides an excellent example of the power AI systems can have when furnished with sufficient high-quality data.

So how can other AI systems create or access more and better chemistry data? One possible solution is to set up systems that pull data out of published research papers and existing databases, such as an algorithm created by researchers at the University of Cambridge, UK, that converts chemical names to structures [6]. This approach has accelerated progress in the use of AI in organic chemistry.

Another potential way to speed things up is to automate laboratory systems. Existing options include robotic materials-handling systems, which can be set up to make and measure compounds to test AI model outputs [7,8]. However, at present this capability is limited, because the systems can carry out only a relatively narrow range of chemical reactions compared with a human chemist.

AI developers can train their models using both real and simulated data. Researchers at the Massachusetts Institute of Technology in Cambridge have used this approach to create a graph-based model that can predict the optical properties of molecules, such as their colour [9].

There is another, particularly obvious solution: AI tools need open data. How people publish their papers must evolve to make data more accessible. This is one reason why Nature requests that authors deposit their code and data in open repositories. It is also yet another reason to focus on data accessibility, above and beyond scientific crises surrounding the replication of results and high-profile retractions. Chemists are already addressing this issue with facilities such as the Open Reaction Database.

But even this might not be enough to allow AI tools to reach their full potential. The best possible training sets would also include data on negative outcomes, such as reaction conditions that don’t produce desired substances. And data need to be recorded in agreed and consistent formats, which they are not at present.
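What an agreed format that also captures negative outcomes might look like can be sketched in a few lines. This is a hypothetical schema written for illustration, not the format of the Open Reaction Database or of any journal; the class `ReactionRecord`, its fields, and the placeholder reactant and solvent names are all assumptions.

```python
import json
from dataclasses import dataclass, asdict
from typing import List, Optional


@dataclass
class ReactionRecord:
    """Hypothetical schema: one attempted reaction, successful or not."""
    reactants: List[str]
    solvent: str
    temperature_celsius: float
    desired_product: str
    succeeded: bool
    yield_percent: Optional[float] = None  # None when no product was obtained

    def __post_init__(self):
        # Enforce one convention so that records from different labs stay comparable.
        if self.succeeded and self.yield_percent is None:
            raise ValueError("a successful reaction must record a yield")
        if not self.succeeded and self.yield_percent is not None:
            raise ValueError("a failed reaction must not record a yield")


def to_json_lines(records):
    """Serialize records as one JSON object per line, with a fixed key order."""
    return "\n".join(json.dumps(asdict(r), sort_keys=True) for r in records)


def failures(records):
    """Return only the negative outcomes."""
    return [r for r in records if not r.succeeded]


# Placeholder names only: not real chemistry and not measured results.
records = [
    ReactionRecord(["reactant_A", "reactant_B"], "solvent_X", 25.0, "product_C", True, 82.0),
    ReactionRecord(["reactant_A", "reactant_B"], "solvent_Y", 80.0, "product_C", False),
]
print(to_json_lines(records))
```

Because the failed attempt is stored with the same fields as the successful one, a model can learn from the conditions that did not work as well as from those that did.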

Chemistry applications require computer models to be better than the best human scientist. Only by taking steps to collect and share data will AI be able to meet expectations in chemistry and avoid becoming a case of hype over hope.

Nature 617, 438 (2023)

doi: https://doi.org/10.1038/d41586-023-01612-x

References

1. Segler, M. H. S., Preuss, M. & Waller, M. P. Nature 555, 604–610 (2018).

2. Struble, T. J. et al. J. Med. Chem. 63, 8667–8682 (2020).

3. Kim, K. et al. npj Comp. Mater. 4, 67 (2018).

4. Yang, K. et al. J. Chem. Inf. Model. 59, 3370–3388 (2019).

5. Jumper, J. et al. Nature 596, 583–589 (2021).

6. Lowe, D. M., Corbett, P. T., Murray-Rust, P. & Glen, R. C. J. Chem. Inf. Model. 51, 739–753 (2011).

7. Coley, C. W. et al. Science 365, eaax1566 (2019).

8. Angello, N. H. et al. Science 378, 399–405 (2022).

9. Greenman, K. P., Green, W. H. & Gómez-Bombarelli, R. Chem. Sci. 13, 1152–1162 (2022).

For chemists, the AI revolution has yet to happen

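Geoffrey Hinton, a leading figure in AI, recently resigned from his position at Google for a clearly stated reason: to speak freely about the risks of AI.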

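Many people worry that AI has gone too far and poses risks. Set against these big-picture concerns, however, many fields of science hold a different view: that AI has not yet gone far enough. Chemistry is a representative example. Machine-learning tools promise a revolution in discovering and synthesizing new compounds, but in practice a wholesale revolution has yet to happen, because there is not enough usable data to train AI systems.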

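An AI system is only as good as the data it is trained on. If chemists want to harness the full potential of generative-AI tools, they need to build training data sets that are large, reliable and free of bias, covering experimental and simulated data as well as historical data and data from unsuccessful experiments.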

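Take AI tools for retrosynthetic analysis, which start from the final product and work backwards to the best starting materials and sequence of reaction steps. In 2018, the 3N-MCTS model designed by the team of Professor Mark P. Waller at Shanghai University showed that AI could design retrosynthetic routes for drugs. It was hailed as the AlphaGo of chemistry and attracted close attention from the pharmaceutical sector in China and abroad.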

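To make accurate chemical predictions, an AI system needs sufficient knowledge of the specific chemical structures involved in different reactions. Chemists who discover a new reaction usually publish their results, but these are often not exhaustive. Without systematic and comprehensive knowledge, an AI system might end up yielding incorrect products.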

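As with ChatGPT, developed by OpenAI, if AI is to outperform existing computational tools in inverse design, it needs enough training data relating chemical structures to properties: hundreds of thousands or even millions of data points.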

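The AlphaFold protein-structure-prediction tool is arguably the most successful chemistry AI application. Its creators trained it on a formidable data set: the information in the Protein Data Bank (PDB), which currently contains more than 200,000 structures. AlphaFold shows how powerful AI can be when supplied with sufficient high-quality data.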

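So how can other AI systems create or access more and better chemistry data? One possible solution is to build systems that extract data from published research papers and existing databases, such as an algorithm created by researchers at the University of Cambridge, UK, that converts chemical names to structures. This approach has accelerated the use of AI in organic chemistry.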

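Another possible approach is to automate laboratory systems, for example with robotic materials-handling systems that make and measure compounds to test AI model outputs. At present this capability is limited, because these robotic systems can carry out only a relatively narrow range of chemical reactions compared with a human chemist.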

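There is another obvious solution: AI tools need open data. How people publish their papers must evolve to make data more accessible. This is one reason why Nature requests that authors deposit their code and data in open repositories.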

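But even this is not enough to allow AI tools to reach their full potential. Good training sets should also include data on negative outcomes, such as reaction conditions that did not produce the desired substance. Data also need to be recorded in a consistent format. Only by taking steps to collect and share data can computer models come to outperform the best human scientists.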