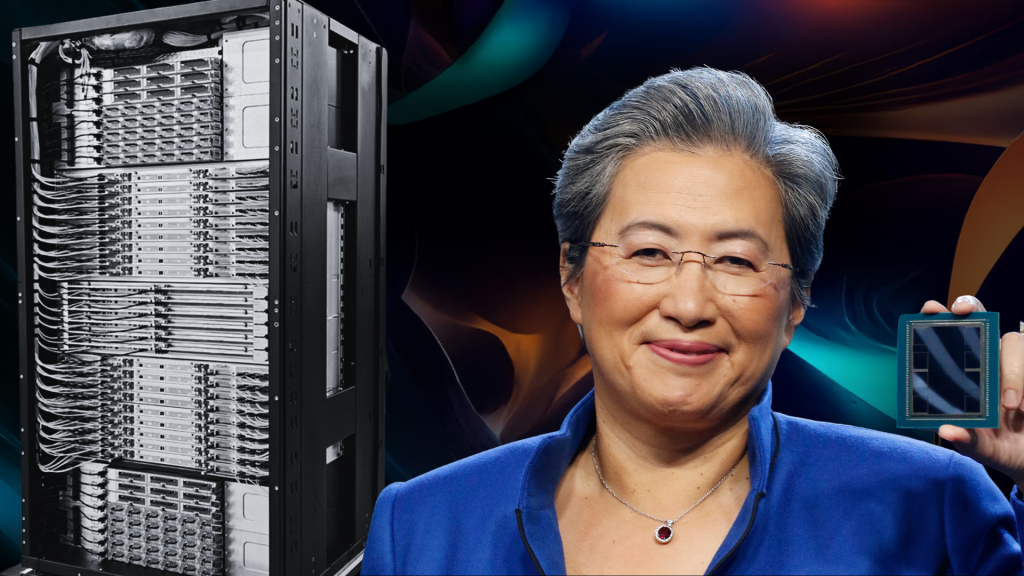

AMD Showcases Its “Helios” Rack-Scale Platform Featuring Next-Gen EPYC CPUs & Instinct GPUs; Ready to Target NVIDIA’s Dominance

Source: WCCFTECH | Published: 2025-10-31

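Summary: AMD has shown its rack-scale ‘Helios’ platform for the first time. It features EPYC Venice processors and Instinct MI400 accelerators, uses the ORW specification and liquid cooling, and challenges NVIDIA’s dominance in AI.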

AMD has showcased its Helios ‘rack-scale’ product at the Open Compute Project (OCP) event, revealing the firm’s design philosophy for its upcoming AI lineups.

For those unaware, AMD announced at its Advancing AI 2025 event that it would ramp up its rack-scale solutions, and the company claimed that the ‘Helios’ rack-scale platform would target the likes of NVIDIA’s Rubin lineup. Now, at OCP, the firm has shown a static display of the Helios rack, which is built on the Open Rack Wide (ORW) specification introduced by Meta. The firm didn’t reveal specific details about Helios beyond saying that it is very optimistic the platform will shape up to be a competitive product.

With ‘Helios,’ we’re turning open standards into real, deployable systems — combining AMD Instinct GPUs, EPYC CPUs, and open fabrics to give the industry a flexible, high-performance platform built for the next generation of AI workloads.

– AMD’s EVP and GM, Data Center Solutions Group

Here is what we know about Helios so far. The platform will feature AMD’s next-gen EPYC Venice CPUs and Instinct MI400 AI accelerators, and the rack will employ AMD’s Pensando networking for scale-out. At OCP, the firm also announced that it will focus on an open technology stack: Helios will use UALink for scale-up and UEC Ethernet for scale-out. Another interesting inclusion is quick-disconnect liquid cooling, which enables high densities and simplifies field service.

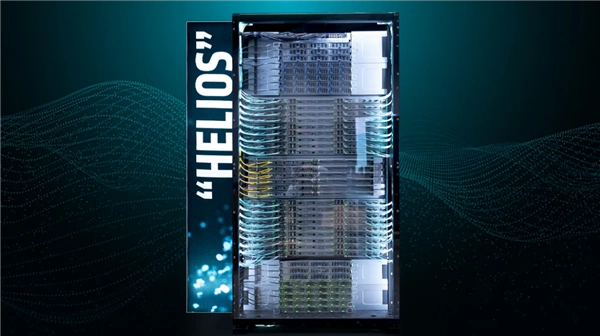

The Helios images shared by Team Red at OCP show a ‘double wide’ ORW rack with a central equipment bay and service space along the sides. You can also see horizontal compute sleds, a different approach from NVIDIA’s Kyber, which we discussed earlier; these sleds account for 70–80% of the rack space. The two fiber-type cable runs, ‘Aqua’ and ‘Yellow,’ serve different purposes, and the routing here is definitely top-notch.

NVIDIA hasn’t seen much competition in the rack-scale segment; however, with AMD’s Helios, things are expected to change drastically, with Team Red looking to rival NVIDIA’s Rubin rack-scale platform. The Helios showcase was definitely a surprise, and it shows that the AI industry is in for serious competition.

Challenging NVIDIA’s Dominance: AMD Shows Its Rack-Scale ‘Helios’ Platform for the First Time, Featuring Next-Gen EPYC and Instinct

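In AI, NVIDIA has long dominated rack-scale solutions. At the OCP event, however, AMD publicly showed its rack-scale ‘Helios’ platform for the first time, mounting a challenge to NVIDIA’s Rubin lineup.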

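AMD’s Helios platform is built on the Open Rack Wide (ORW) specification introduced by Meta. Although specific details have not yet been announced, AMD is confident that the platform will be a highly competitive product.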

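AMD’s EVP and GM of the Data Center Solutions Group said: “With Helios, we’re turning open standards into deployable systems, combining AMD Instinct GPUs, EPYC CPUs, and open fabrics to provide a flexible, high-performance platform for next-generation AI workloads.”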

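Helios will feature AMD’s next-generation technologies, including EPYC Venice processors and Instinct MI400 AI accelerators. For networking, the platform will use AMD Pensando technology for scale-out.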

In addition, Helios uses UALink and UEC Ethernet and is equipped with quick-disconnect liquid cooling, which both raises system density and simplifies field service.

The Helios platform AMD showed at the OCP event is a ‘double wide’ ORW rack, with an equipment bay in the center and service space on both sides.

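The rack also houses horizontal compute sleds, which take up 70% to 80% of the space and differ from NVIDIA’s Kyber design. Two kinds of fiber cable runs, ‘Aqua’ and ‘Yellow,’ are also routed through the rack and serve different functions.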